Sina Masnadi

I received my Ph.D. in Computer Science from the University of Central Florida in August 2022, with a thesis titled "Distance Perception Through Head-Mounted Displays". During graduate school, I worked with Dr. Joseph LaViola at the Interactive Computing Experiences Research Cluster (ICE).

Education

University of Central Florida

Sharif University of Technology

Publications

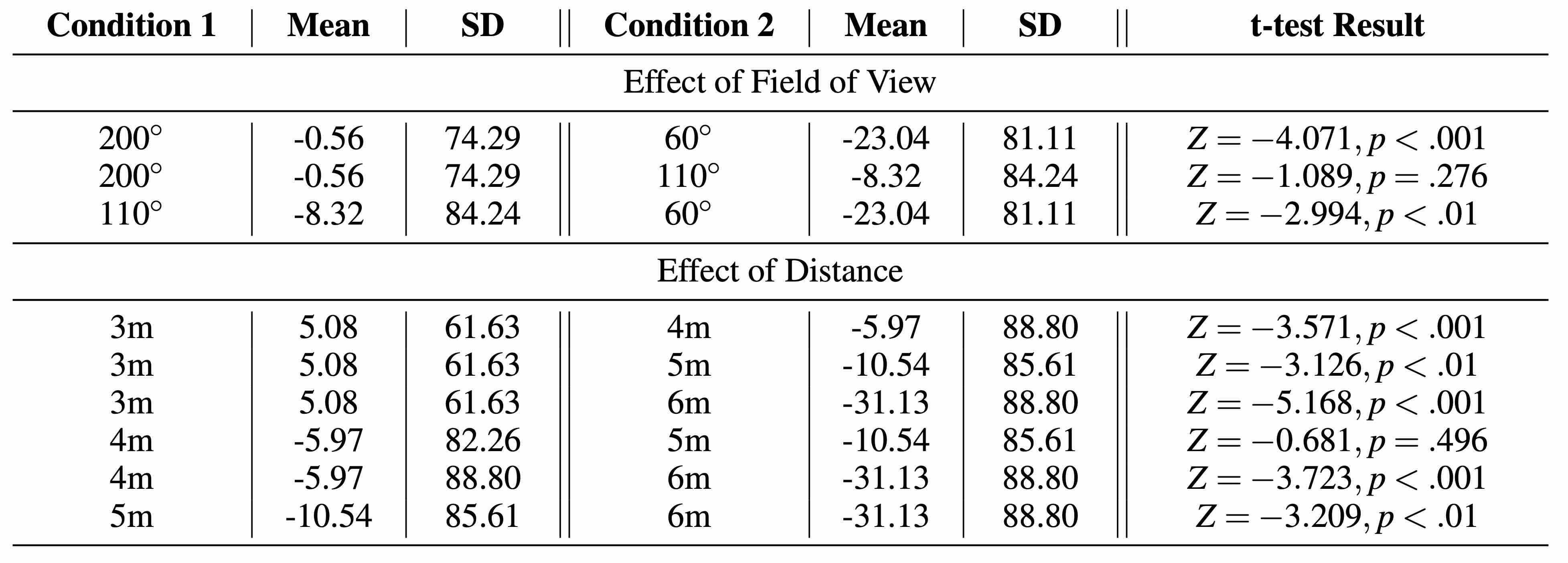

Effects of Field of View on Egocentric Distance Perception in Virtual Reality

Distance Perception with a Video See-Through Head-Mounted Display

Field of View Effect on Distance Perception in Virtual Reality

ConcurrentHull: A Fast Parallel Computing Approach to the Convex Hull Problem

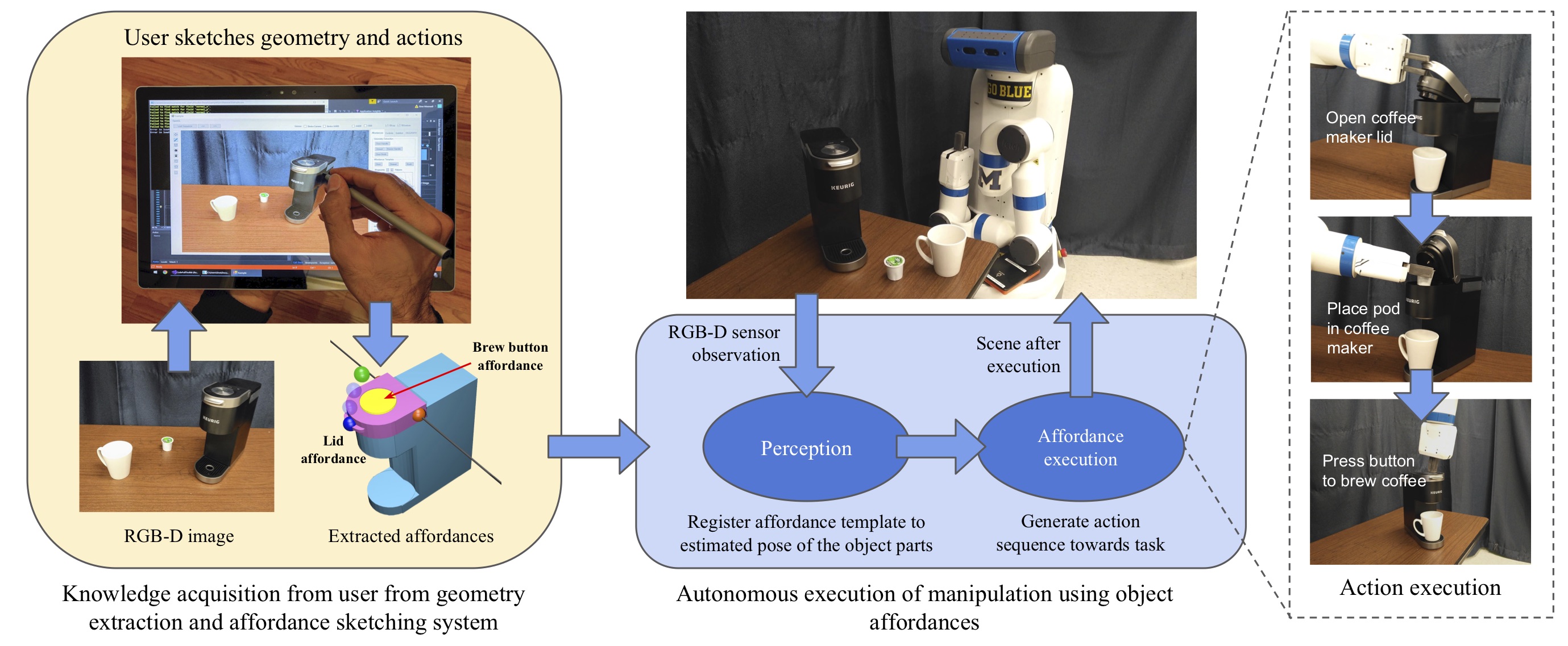

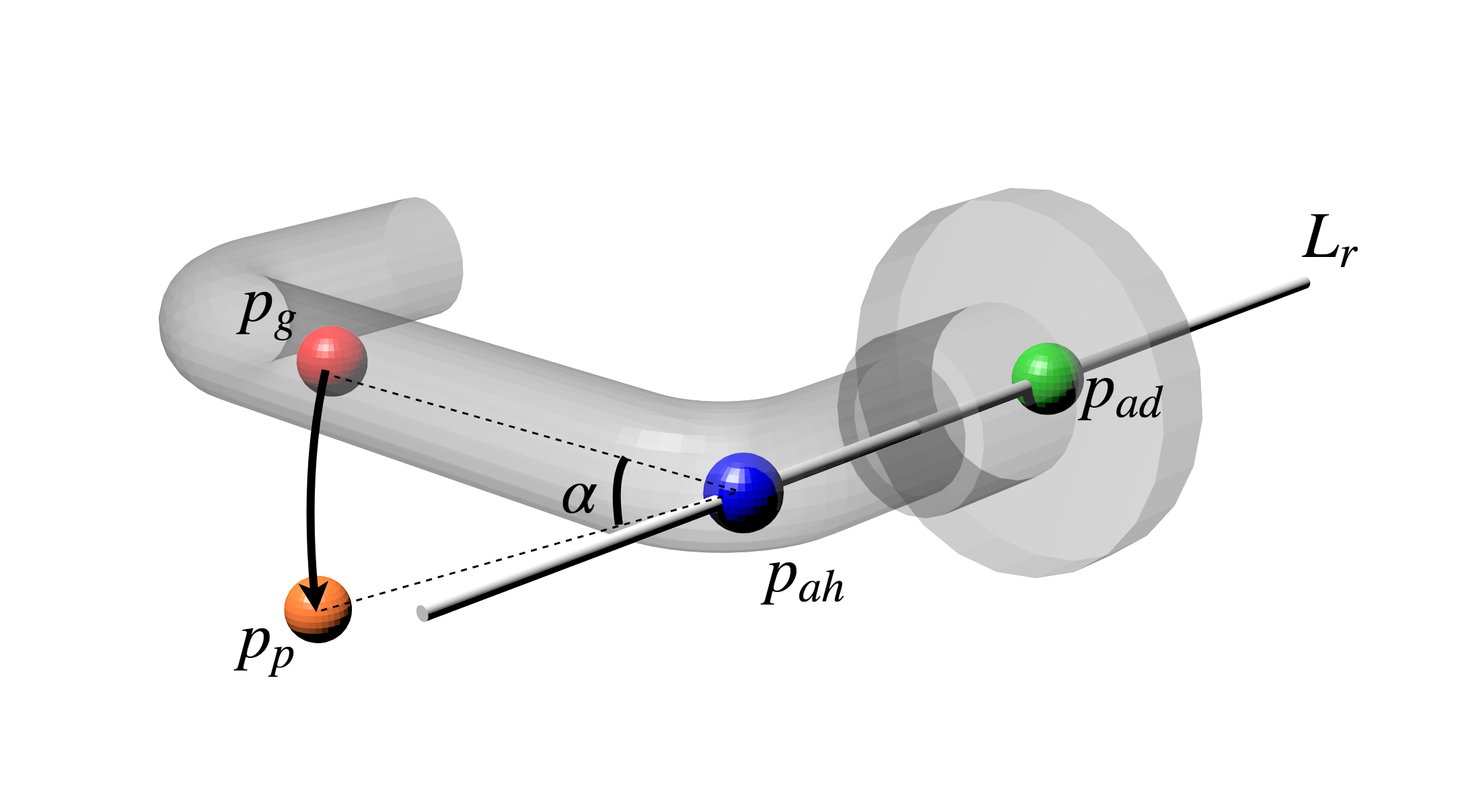

Sketching affordances for human-in-the-loop robotic manipulation tasks

VRiAssist: An Eye-Tracked Virtual Reality Low Vision Assistance Tool

AffordIt!: A Tool for Authoring Object Component Behavior in VR

A Sketch-Based System for Human-Guided Constrained Object Manipulation

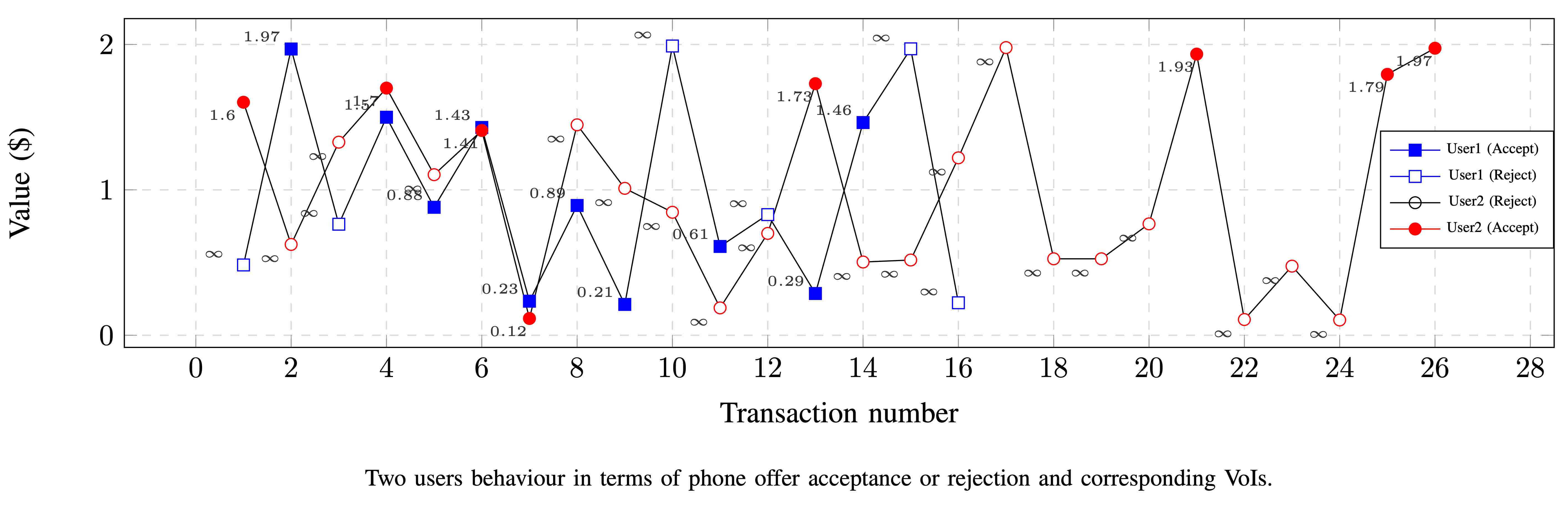

Investigating the Value of Privacy within the Internet of Things

Experience

Senior Software Engineer

At Magic Leap, I use Unity to design and implement user studies that quantify the user experience in augmented reality. I develop interactive AR applications, run studies with them, and analyze the collected data to improve usability and overall user satisfaction. The role combines software development with human-computer interaction research, with the goal of making AR devices more immersive and intuitive.

Research Scientist

Developed robotic systems for household and industrial applications using state-of-the-art machine learning and artificial intelligence approaches.

Full-stack Developer

Developed web services using Node.js, MongoDB, Heroku, AWS, and AngularJS. I was responsible for migrating the traditional servers to cloud services using Node.js and AWS (Lambda, S3, and EC2). I also helped with Android app development by integrating Google Firebase services for user profile management.

Senior Android Developer

Cafe Bazaar is the largest private mobile software company in Iran. I worked on its main product, "Bazaar", an Android application marketplace (similar to Google Play) for Iranian smartphone users, which currently has more than 36 million active users. My contributions included:

- UI/UX design based on personas, scenarios, and goals

- Improving UX through user studies, A/B testing, and data analytics

- Creating an Android image caching system (built before Fresco, UIL, Picasso, and similar libraries became popular)

- Implementing root installation for apps and automatic updates of installed apps, similar to Google Play and the App Store

- Designing and implementing server/client data transfer protocols and structures

- Collaborating on APK delta updates (updating Android apps using only the diff between versions)

Creator

An Android wallpaper application with more than 300,000 active users at its peak.